Apache Airflow is an open source platform to programmatically author, schedule, and monitor workflows. With Airflow's grid view, you can see at a glance what is happening with your DAGs and tasks, quickly pinpoint task failures, and drill down into their root causes.

# Installing Apache Airflow

Pull the latest version of any Airflow provider at any time, or follow a straightforward contribution process to build your own provider and install it as a Python package.

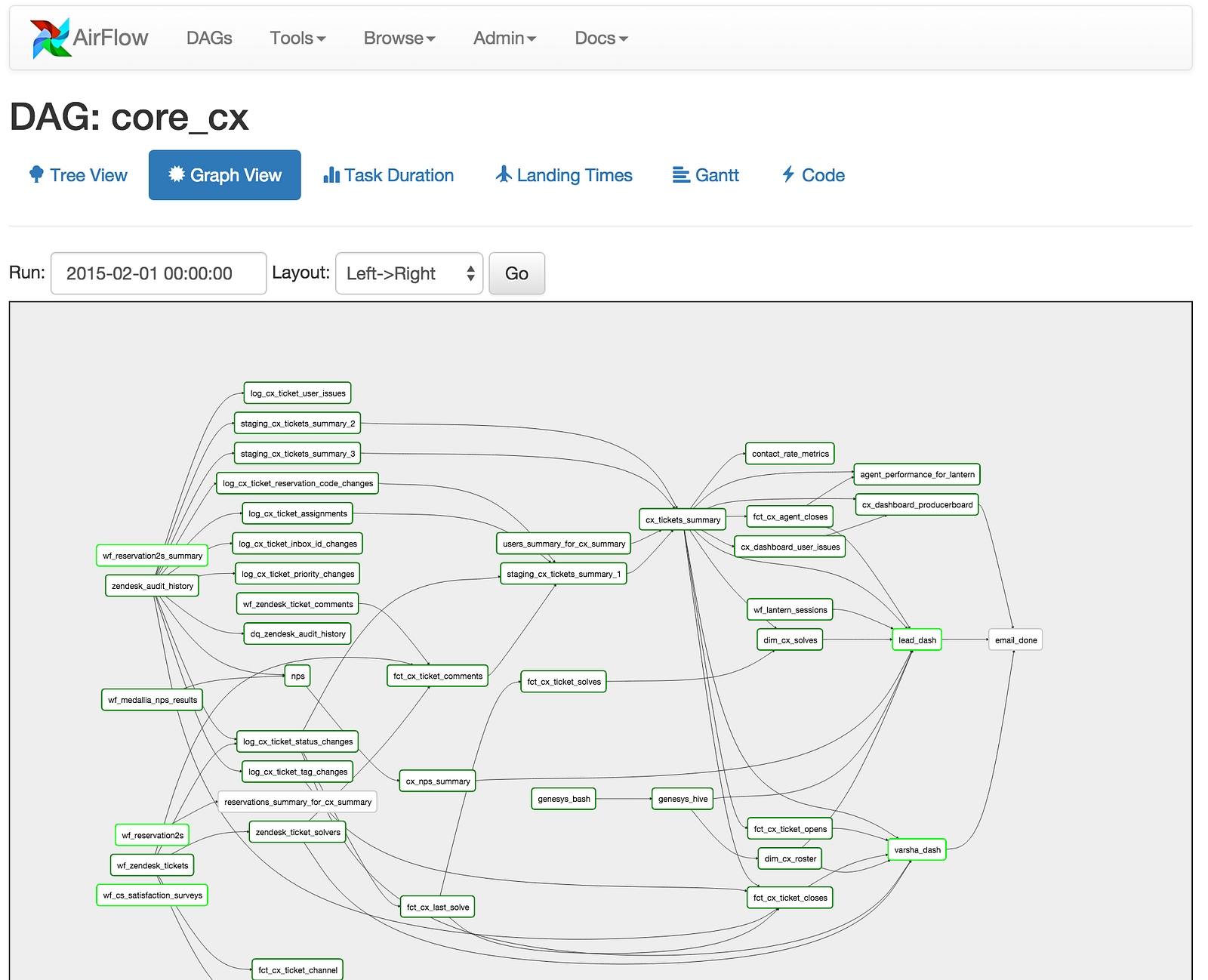

Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative, and each workflow's entire definition and execution are handled in one place. Airflow also overcomes some of the limitations of the cron utility.

Airflow 2 also simplifies how DAGs are organized and deployed. Task Groups replace SubDAGs as a way to group tasks in the Airflow UI; unlike SubDAGs, Task Groups don't affect task execution behavior and don't limit parallelism. Airflow 2 also supports custom XCom backends. If you run the KubernetesExecutor, make the most of the `pod_override` parameter for easy 1:1 overrides and of the new YAML `pod_template_file`, which replaces the pod settings previously configured in `airflow.cfg`.

Note that Airflow keeps its Python dependencies deliberately open-ended, so a plain `pip install apache-airflow` may occasionally fail or produce an unusable Airflow installation. For repeatable installs, the Airflow project publishes "known-to-be-working" constraint files for each release and Python version, which you pass to pip with `--constraint`.
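Because an unpinned `pip install apache-airflow` is not reliably repeatable, the official installation instructions pin dependencies with a constraint file hosted on GitHub, whose URL is built from the Airflow release and the Python minor version. The sketch below only assembles that URL and the corresponding pip command; the helper names `constraint_url` and `pip_install_command` are hypothetical, and the version numbers in the example are placeholders for whichever release you actually want.

```python
import sys
from typing import List, Optional

# URL pattern of the "known-to-be-working" constraint files that the
# Airflow project publishes for each release and Python minor version.
CONSTRAINT_TEMPLATE = (
    "https://raw.githubusercontent.com/apache/airflow/"
    "constraints-{airflow}/constraints-{python}.txt"
)


def constraint_url(airflow_version: str, python_version: Optional[str] = None) -> str:
    """Return the constraint-file URL for an Airflow release and Python minor version."""
    if python_version is None:
        # Default to the interpreter running this script, e.g. "3.11".
        python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    return CONSTRAINT_TEMPLATE.format(airflow=airflow_version, python=python_version)


def pip_install_command(airflow_version: str, python_version: Optional[str] = None) -> List[str]:
    """Build (but do not run) a pip command that installs Airflow with pinned dependencies."""
    return [
        sys.executable, "-m", "pip", "install",
        f"apache-airflow=={airflow_version}",
        "--constraint", constraint_url(airflow_version, python_version),
    ]


if __name__ == "__main__":
    # Placeholder versions: substitute the release and Python version you target.
    print(" ".join(pip_install_command("2.7.3", "3.10")))
```

Passing the returned list to `subprocess.run` (or pasting the printed command into a shell) installs that exact Airflow release together with the dependency versions it was tested against.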

# Writing Apache Airflow code

Unlike AWS Step Functions, which has its own language for defining workflows, Apache Airflow workflows are written in Python, with each task represented by a Python class and arranged in a logical sequence. Pass information between tasks with clean, efficient code that is abstracted from the task dependency layer. Leverage dynamic tasks, sensors, and deferrable operators to create robust, event-driven workflows. Chain dynamic tasks together to simplify and accelerate ETL and ELT processing, spinning up as many parallel tasks as you need at runtime in response to the outputs of upstream tasks.
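In Airflow itself, this pattern is expressed with TaskFlow `@task` functions, whose return values are passed between tasks via XCom, and with dynamic task mapping (calling `.expand()` on a task, available since Airflow 2.3). The sketch below does not use Airflow at all: it only mimics the shape of the pattern with Python's standard library, so that the fan-out at runtime is easy to see. The task names `list_files`, `count_chars`, and `total` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def list_files() -> List[str]:
    """Upstream task: how many items it returns is only known at runtime."""
    return ["a.csv", "b.csv", "c.csv"]


def count_chars(filename: str) -> int:
    """Mapped task: one instance runs per element of the upstream output."""
    return len(filename)


def total(counts: List[int]) -> int:
    """Downstream task: reduces the results of all mapped instances."""
    return sum(counts)


def run_pipeline() -> int:
    files = list_files()
    # Fan out: one parallel "task instance" per upstream element,
    # decided at runtime rather than hard-coded in the pipeline definition.
    with ThreadPoolExecutor(max_workers=len(files) or 1) as pool:
        counts = list(pool.map(count_chars, files))
    # Fan in: the downstream step receives every mapped result.
    return total(counts)


if __name__ == "__main__":
    print(run_pipeline())  # 15
```

The point of the design is that the number of parallel workers follows the data: if `list_files` returned ten names, ten instances of `count_chars` would run without any change to the pipeline code.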

An Apache Airflow DAG is a data pipeline. Its tasks are the nodes, and the directed edges, drawn as arrows in the diagram above, are the dependencies between those tasks. For example, if task T1 must be executed first and T2, T3, and T4 only afterwards, T1 is upstream of the other three tasks.

Many organizations looking to modernize their pipeline orchestration have turned to Apache Airflow, the open source standard for workflow orchestration, because it offers a flexible and scalable way to programmatically author, schedule, and monitor data pipelines in Python.

Airflow 2 adds several features for running it in production. You can launch multiple scheduler replicas to increase task throughput and ensure high availability, and the redesigned scheduler substantially reduces task scheduling latency. Airflow's stable REST API, which includes a permissions framework, lets you build programmatic services around your Airflow environment. Deferrable operators and triggers accommodate long-running tasks: while a task waits, it is suspended and the wait is handled asynchronously by a trigger, which frees up worker slots and makes more efficient use of resources.
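To make the T1 to T2/T3/T4 example concrete, the sketch below models the DAG as a mapping from each task to the tasks it depends on and uses Python's standard `graphlib` module (Python 3.9+) to derive a valid execution order. It assumes T2, T3, and T4 each depend only on T1, since the text does not say whether they depend on each other; in an Airflow DAG file the same dependencies would be written `t1 >> [t2, t3, t4]`. This is an illustration of DAG ordering, not of how Airflow's scheduler is implemented.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of upstream tasks it depends on.
# Assumption: T2, T3 and T4 depend only on T1, not on one another.
dag = {
    "T2": {"T1"},
    "T3": {"T1"},
    "T4": {"T1"},
}


def execution_waves(graph):
    """Group tasks into waves; all tasks in one wave may run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstreams are all done
        waves.append(ready)
        sorter.done(*ready)  # mark them finished, unlocking their downstreams
    return waves


if __name__ == "__main__":
    print(execution_waves(dag))  # [['T1'], ['T2', 'T3', 'T4']]
```

The `prepare()` call also shows why the "acyclic" part matters: a graph such as `{"A": {"B"}, "B": {"A"}}` has no valid starting task, and `graphlib` rejects it with a `CycleError`.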
